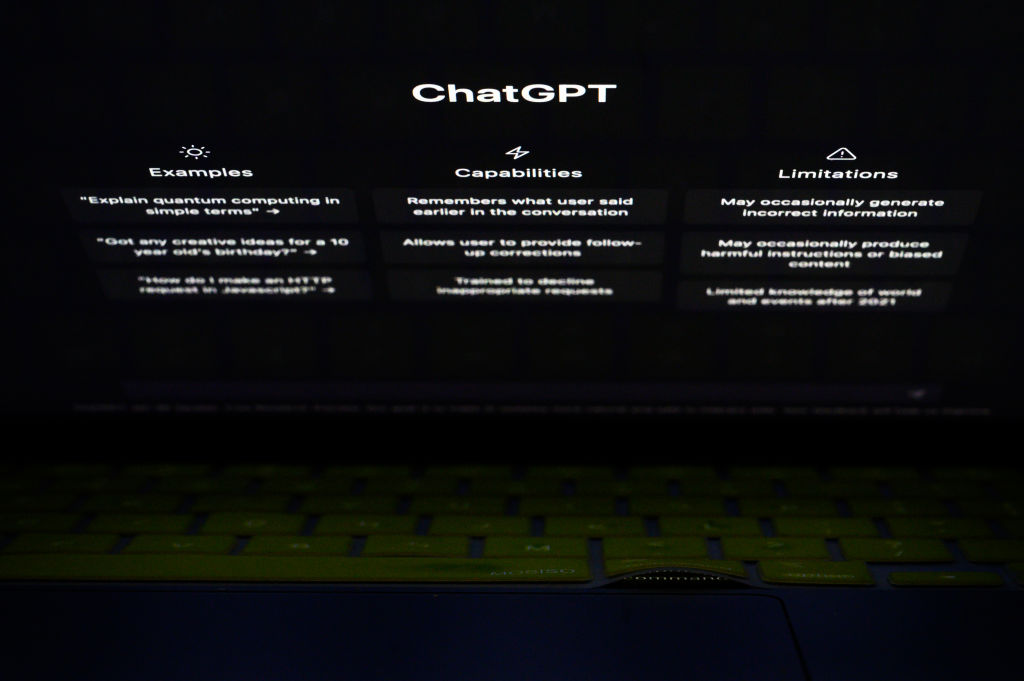

A recent study by the University of East Anglia has found that OpenAI’s ChatGPT has a strong liberal bias, with results showing a “significant and systemic political bias toward Democrats in the U.S., Lula [Brazil’s leftist president] in Brazil, and the Labour Party in the U.K.”

Although ChatGPT’s political impartiality has been questioned before, the researchers describe this as the first robust, evidence-backed examination of it. Dr. Fabio Motoki, the study’s lead researcher from Norwich Business School, said, “The growing reliance on AI systems for information dissemination necessitates impartiality in platforms like ChatGPT. Any hint of political bias can skew user perspectives, thereby affecting political and electoral dynamics.”

To gauge the platform’s neutrality, the researchers asked ChatGPT to impersonate individuals from across the political spectrum and answer more than 60 ideological questions. By comparing these replies with ChatGPT’s default answers to the same questions, they could measure how closely the default responses tracked a particular political stance.
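The comparison described above can be illustrated with a minimal Python sketch. It is not the study's code: the 0-to-3 answer coding, the `pearson` helper, and all answer values below are made up for illustration. The idea is only that default answers which correlate more strongly with one persona than with another suggest a tilt toward that persona.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numeric answers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant answers")
    return cov / sqrt(var_x * var_y)

# Hypothetical coding: 0 = strongly disagree ... 3 = strongly agree.
# These values are invented for illustration, not taken from the study.
default_answers    = [3, 0, 2, 1, 3, 0]
democrat_persona   = [3, 0, 2, 1, 3, 1]
republican_persona = [0, 3, 1, 2, 0, 3]

print("default vs. Democrat persona:  ", round(pearson(default_answers, democrat_persona), 3))
print("default vs. Republican persona:", round(pearson(default_answers, republican_persona), 3))
```

In this toy data the default answers line up with the Democrat persona and run opposite to the Republican persona, which is the kind of pattern the researchers reported looking for.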

To account for the inherent randomness of large language models, each question was asked 100 times. The collected responses were then put through a 1000-repetition “bootstrap,” a method of resampling the original data, to make the conclusions drawn from them more reliable.
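For readers unfamiliar with the technique, here is a minimal sketch of a generic percentile bootstrap in Python. The article does not describe the study's exact procedure, so this should be read as an illustration of the general idea only; the function name `bootstrap_mean_ci`, the 0-to-3 answer coding, and the sample data are assumptions.

```python
import random

def bootstrap_mean_ci(samples, n_resamples=1000, alpha=0.05, seed=0):
    """Return (mean, lower, upper): the sample mean and a percentile
    bootstrap confidence interval for it."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(samples)
    means = []
    for _ in range(n_resamples):
        # Draw n answers with replacement from the original answers.
        resample = [samples[rng.randrange(n)] for _ in range(n)]
        means.append(sum(resample) / n)
    means.sort()
    lo_idx = int((alpha / 2) * n_resamples)
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))
    return sum(samples) / n, means[lo_idx], means[hi_idx]

# Hypothetical data: 100 repeated answers to one question,
# coded 0 = strongly disagree ... 3 = strongly agree.
answers = [0, 1, 2, 3] * 25

mean, lower, upper = bootstrap_mean_ci(answers)
print(f"mean answer: {mean:.2f}, 95% interval: [{lower:.2f}, {upper:.2f}]")
```

Resampling the 100 answers many times gives a sense of how much the average answer would move if the questions were asked again, which is why repeated asking and bootstrapping are used together.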

Victor Rodrigues, a co-author of the study, noted, “The model’s randomness means that even when posing as a Democrat, ChatGPT could sporadically offer right-leaning answers. Multiple testing rounds were indispensable.”

The team also employed additional testing measures, including “dose-response,” “placebo,” and “profession-politics alignment” tests.

The researchers have also made their analysis tool publicly available, making it easier for others to detect bias in AI responses, an effort Motoki called “democratising oversight.”

The study points to two possible sources of the detected bias: the training dataset and the algorithm itself. Either could contain latent biases or amplify them.

The study is titled “More Human than Human: Measuring ChatGPT Political Bias.” It used ChatGPT version 3.5 and questions devised by The Political Compass.